I. What is an InfiniBand Network?

InfiniBand, often abbreviated as “IB”, is a network communication standard and one of the protocols implementing RDMA (Remote Direct Memory Access) technology. It utilizes high-speed differential signaling technology and multi-channel parallel transmission mechanisms. Its primary goals are to provide "high performance, low latency, and high reliability".

InfiniBand is an interconnect technology dedicated to high-performance computing (HPC) in the server domain. It features extremely high throughput and exceptionally low latency, used for data interconnection between computers (e.g., replication, distributed workloads). InfiniBand is also employed as a direct or switched interconnect between servers and storage systems (like SANs and direct-attached storage), as well as between storage systems themselves. Additionally, it facilitates communication between servers and networks (such as LANs, WANs, and the Internet). It is widely used in data centers and HPC/storage fields. Subsequently, with the rise of artificial intelligence, it has become the network interconnect technology of choice for connecting GPU servers.

II. The Development History of InfiniBand

In the early 1990s, Intel pioneered the introduction of the PCI bus design into the standard PC architecture to support the growing number of external devices. However, as CPUs, memory, hard drives, and other components rapidly improved, the slower evolution of the PCI bus became a bottleneck for the entire system. To address this issue, IT industry giants including Compaq, Dell, HP, IBM, Intel, Microsoft, and Sun, along with over 180 other companies, jointly founded the **IBTA (InfiniBand Trade Association)** in 1999.

The purpose of IBTA was to research new alternative technologies to replace PCI and solve its transmission bottleneck problem. Consequently, in 2000, the **InfiniBand Architecture Specification version 1.0** was officially released. It introduced the RDMA protocol, offering lower latency, greater bandwidth, higher reliability, and enabling significantly more powerful I/O performance, establishing it as a new standard for system interconnect technology.

Any account of InfiniBand has to mention an Israeli company, **Mellanox** (its Chinese name sounds like the phrase for "selling screws", which makes it easy to remember). Founded in Israel in May 1999 by several former employees of Intel and Galileo Technology, Mellanox joined the InfiniBand Trade Association soon after its establishment and launched its first InfiniBand product in 2001.

In 2002, the InfiniBand camp faced a major upheaval. Intel "abandoned ship," deciding to shift its development focus to **PCI Express (PCIe)**, which was launched in 2004. Another giant, Microsoft, also withdrew from InfiniBand development. Although companies like Sun and Hitachi remained committed, the future of InfiniBand was overshadowed.

Starting in 2003, InfiniBand pivoted towards a new application domain: "computer cluster interconnection". In 2005, it found another new application: "storage device connectivity". After 2012, driven by the continuous growth in High-Performance Computing (HPC) demands, InfiniBand technology surged forward, steadily increasing its market share.

As InfiniBand technology gradually rose to prominence, Mellanox grew with it and became the market leader in InfiniBand. In 2010, Mellanox announced its acquisition of Voltaire, leaving Mellanox (itself acquired by NVIDIA in a deal announced in 2019) and QLogic (whose InfiniBand business was acquired by Intel in 2012) as the primary InfiniBand suppliers.

In 2013, Mellanox acquired silicon photonics technology company Kotura and parallel optical interconnect chip maker IPtronics, further solidifying its industry portfolio.

In 2015, InfiniBand's share of the "TOP500" supercomputer list surpassed 50% for the first time. This was the first time InfiniBand overtook Ethernet on the list, making it the preferred cluster interconnect technology for supercomputers.

By 2015, Mellanox held an "80% share" of the global InfiniBand market. Their business scope had expanded from chips to encompass the entire spectrum: network adapters, switches/gateways, remote communication systems, and cables/modules, establishing them as a world-class network provider.

In 2019, NVIDIA made a significant move, announcing the acquisition of Mellanox for $6.9 billion (the deal closed in 2020). Jensen Huang, NVIDIA's CEO, stated: "This is the union of two world-leading companies in high-performance computing. NVIDIA focuses on accelerated computing, while Mellanox focuses on interconnect and storage." In hindsight, NVIDIA demonstrated remarkable foresight: large model training relies heavily on high-performance computing clusters, and InfiniBand networks are an excellent fit for such clusters.

III. How InfiniBand Works

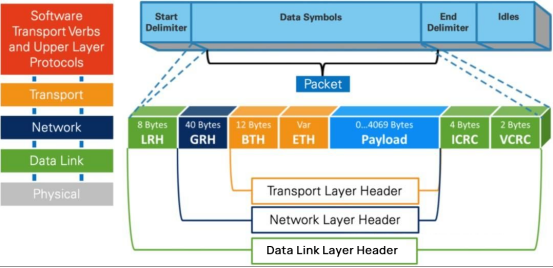

The working principles of InfiniBand might seem complex for those outside networking specialties. Beginners can grasp the basics or skip this section. The InfiniBand protocol also adopts a "layered architecture", with each layer being independent and providing services to the layer above it.

Physical Layer: Defines how bits are placed on the physical link as symbols, how those symbols delimit frames and carry data, and what fill is sent between packets. It also specifies the signaling protocol that determines what counts as a valid packet.

Link Layer: Defines the format of data packets and the protocols for packet operations, such as flow control, routing, encoding, decoding, etc.

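Network Layer: Routes packets between subnets by adding a 40-byte Global Route Header (GRH) to the packet. During forwarding, routers recompute only the Variant CRC (VCRC), which covers the fields that change from hop to hop. The Invariant CRC (ICRC) is left untouched, which ensures end-to-end data transmission integrity.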

Transport Layer: Delivers packets to a specific Queue Pair (QP) and instructs the QP on how to process them. To keep transmission reliable and efficient, InfiniBand uses Credit-Based Flow Control (CBFC), which is applied on each link. The receiver advertises credits (representing the data volume it can currently accept), and the sender transmits only while it holds credits, which prevents buffer overflow and the resulting packet loss.

The QP (Queue Pair) is the fundamental communication unit in RDMA technology. It consists of a pair of queues: the SQ (Send Queue) and the RQ (Receive Queue). When users call APIs to send or receive data, they are in effect posting a request to the corresponding queue of the QP. The hardware then processes the queued requests one by one, in order, and the application typically polls to learn when each request has completed.
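To make the queue-pair idea concrete, here is a minimal toy model in Python. It is only a sketch of the concept: the names `WorkRequest`, `QueuePair`, `post_send`, `post_recv`, and `process` are hypothetical and chosen for illustration. This is not the real verbs API, and no actual RDMA hardware is involved.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class WorkRequest:
    """A hypothetical request descriptor: an ID plus the data to move."""
    wr_id: int
    payload: bytes = b""


@dataclass
class QueuePair:
    """Toy QP: one Send Queue (SQ) and one Receive Queue (RQ)."""
    qp_num: int
    send_queue: deque = field(default_factory=deque)
    recv_queue: deque = field(default_factory=deque)

    def post_send(self, wr: WorkRequest) -> None:
        # The user only enqueues the request; nothing is transmitted yet.
        self.send_queue.append(wr)

    def post_recv(self, wr: WorkRequest) -> None:
        # A receive request represents a buffer waiting for incoming data.
        self.recv_queue.append(wr)


def process(sender: QueuePair, receiver: QueuePair) -> list:
    """Drain the sender's SQ in FIFO order.

    Each send consumes one receive request posted on the receiver's RQ.
    Returns a list of (send_wr_id, recv_wr_id, payload) completions.
    Sends with no matching receive stay queued (assumption of this toy).
    """
    completions = []
    while sender.send_queue and receiver.recv_queue:
        send_wr = sender.send_queue.popleft()
        recv_wr = receiver.recv_queue.popleft()
        completions.append((send_wr.wr_id, recv_wr.wr_id, send_wr.payload))
    return completions
```

In this sketch, posting two sends against a single posted receive completes only the first send, and the second remains in the SQ until another receive is posted. That mirrors the in-order, one-by-one processing described above.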

The advantages of "CBFC" technology can be summarized in three main points:

1. Avoids Congestion: Through dynamic credit adjustment and lossless transmission, CBFC effectively prevents network congestion and packet loss.

2. Improves Efficiency: The sender can continuously transmit data without waiting for acknowledgments until the credit is exhausted, thereby enhancing data transfer efficiency.

3. Auto-configuration: The flow control mechanism automatically activates upon physical installation of InfiniBand devices, requiring no manual user configuration.
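The credit mechanism behind these points can be illustrated with a small simulation. The following is a simplified sketch in Python, not a model of real InfiniBand hardware: `CreditLink`, `simulate`, and all parameters are hypothetical names, credits are counted in whole packets, and time advances in abstract ticks.

```python
from collections import deque


class CreditLink:
    """Toy link: the sender may transmit only while it holds credits."""

    def __init__(self, buffer_slots: int):
        self.credits = buffer_slots   # sender's view of free receiver slots
        self.rx_buffer = deque()      # packets waiting at the receiver

    def try_send(self, packet) -> bool:
        if self.credits == 0:
            return False              # stall rather than overflow the buffer
        self.credits -= 1
        self.rx_buffer.append(packet)
        return True

    def receiver_drain(self, n: int) -> int:
        """Receiver consumes up to n packets and returns that many credits."""
        freed = 0
        while self.rx_buffer and freed < n:
            self.rx_buffer.popleft()
            freed += 1
        self.credits += freed
        return freed


def simulate(num_packets: int, buffer_slots: int, drain_per_tick: int):
    """Return (delivered, sender_stalls, max_buffer_occupancy).

    Assumes drain_per_tick >= 1, otherwise the loop would never finish.
    """
    link = CreditLink(buffer_slots)
    pending = deque(range(num_packets))
    delivered = stalls = max_occupancy = 0
    while pending or link.rx_buffer:
        # Send back to back, with no per-packet acknowledgment,
        # until the credits run out.
        while pending and link.try_send(pending[0]):
            pending.popleft()
        if pending:
            stalls += 1               # sender waits for credits to return
        max_occupancy = max(max_occupancy, len(link.rx_buffer))
        delivered += link.receiver_drain(drain_per_tick)
    return delivered, stalls, max_occupancy
```

With these assumptions, `simulate(10, 4, 2)` delivers all 10 packets while the receive buffer never holds more than 4 of them. The sender slows down instead of overrunning the receiver, which is the lossless behavior the list above describes.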

As shown above, InfiniBand defines its own formats for Layers 1 through 4 (Physical, Link, Network, Transport), which makes it a complete network protocol stack. Flow control underpins the sending and receiving of InfiniBand packets, and it is what makes a highly effective lossless network possible.

Of course, InfiniBand's high-speed, lossless network also relies on technologies and features such as Socket Direct, Adaptive Routing, the Subnet Manager (SM), network partitioning, and the SHARP (Scalable Hierarchical Aggregation and Reduction Protocol) engine for network optimization. Together, these components deliver its hallmark high performance, low latency, and easy scalability.